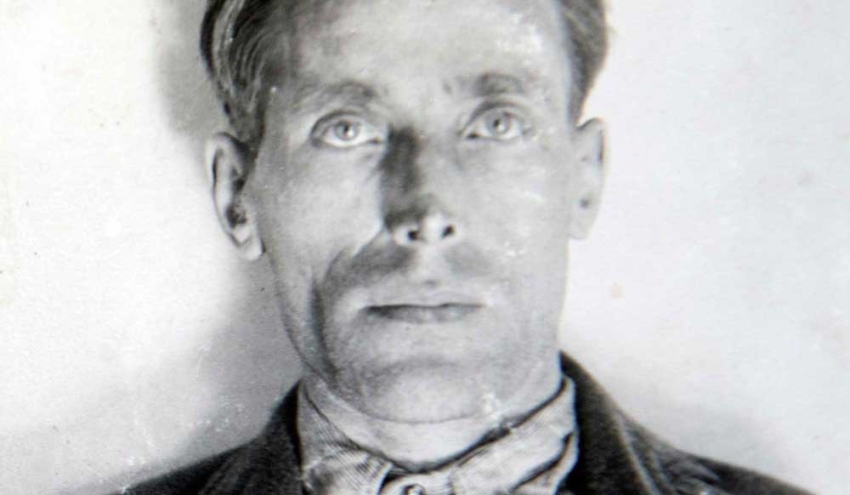

Remembering the Life and Music of Labor Agitator Joe Hill, Who Was Executed 100 Years Ago Today

He was killed by firing squad in the state of Utah on November 19, 1915.

Joe Hill saw his music as a weapon in the class war, composing songs to be sung on soapboxes, picket lines or in jail. And 100 years ago today, the forces of capital and the state of Utah executed him.

Chicago musician and scholar Bucky Halker is honoring the centennial with a CD of new interpretations of Hill’s music, “Anywhere But Utah—The Songs of Joe Hill,” taking his title from Hill’s dying wish that his remains be transported out of state because he didn’t want “to be found dead in Utah.” The album includes such familiar Hill classics as "The Preacher and the Slave," "There is Power in a Union" and "Rebel Girl" as well as some surprising obscurities, like the wistfully romantic "Come and Take a Joy-Ride in My Aeroplane."

Born Joel Hagglund in Sweden, Hill immigrated to the United States in 1902, changing his name to Joseph Hillstrom, which would eventually be shortened to Joe Hill. Working his way across the country, Hill became politicized, eventually joining the Industrial Workers of the World. Popularly known as the Wobblies, the IWW sought to organize the workers that more mainstream unions avoided (the unskilled, migrants, immigrants, minorities) in an effort to combine the entire working class into One Big Union.

As a Wobbly, Hill was active in free speech fights in Fresno and San Diego, a strike of railroad construction workers in British Columbia and even fought in the Mexican Revolution.

In 1914, Hill was arrested in Salt Lake City and charged with killing a storekeeper, allegedly in a botched robbery. Despite the flimsy nature of the evidence, Hill was convicted and sentenced to death, with the prosecutor urging conviction as much on the basis of Hill’s IWW membership as any putative evidence of his involvement in the crime. An international amnesty movement pressed for a new trial, but the Utah governor refused and Hill was executed by firing squad on November 19, 1915. In a final message to IWW General Secretary Bill Haywood, Hill urged, “Don’t waste any time in mourning—organize.”

Since his death, Hill has been immortalized in a wide variety of cultural expression, including poetry by Kenneth Patchen, fiction by Wallace Stegner, and a song by Alfred Hayes and Earl Robinson, popularized by Paul Robeson, promising “where workingmen are out on strike, Joe Hill is at their side.”

I talked to Halker about Hill’s music, politics and legacy. Halker is the author of the seminal work on Gilded Age labor music, For Democracy, Workers, and God: Labor Song-Poems and Labor Protest, 1865-95, and has previously released a tribute album to Woody Guthrie.

For one who was not a native English speaker, Joe Hill had a keen understanding of American slang, humor, and various folk and popular song forms. How did he become such a master of the American vernacular? How does he compare with other folksingers closely associated with insurgent movements, such as Woody Guthrie?

Hill is part of a long tradition of “organic” intellectuals in the USA. He was also just plain smart, which you can tell from reading his lyrics and other writing. He was self-educated, with an appetite for ideas. And remember that the labor movement was filled with men and women of this sort, dating to the early 19th century. Unions, of course, often had their own libraries, so workers could check out literature related to everything from poetry to economics. Also, cheap pamphlets on the issues of the day, including Marxism, were extremely common in the years after the Civil War and well into the 20th century. If you look at the people who wrote labor music and poetry, they typically share this kind of background.

Hill and Guthrie also share a tremendous skill in the realm of vernacular speech. Hill mastered the hobo and Wobbly slang of the era and the latest music-hall and vaudeville lyrics. Also, Hill’s work is filled with humor, irony and sarcasm—hardly easy skills to gain in your second language. No doubt he picked all this up from hobos, labor activists, and Wobblies, but I also believe his ear for music helped him in this effort.

Hill had some musical training and a passion for music that is obvious in his lyrical approach. You can tell from his lyrics that he paid close attention to the music hall and Tin Pan Alley writers of the day. Most of them were also immigrants or children of immigrants and were very skilled at slang, lyrical twists and clever use of idioms. Indeed, Hill’s lyrics and choice of tunes have much more in common with music hall composers than with the folk or country models that Guthrie and others made use of in the years of the great labor uprising of the 1930s, and which became the template for labor songsters thereafter.

Hill and other Wobbly bards and writers should get some credit for their use of sarcasm and irony in the development of American literature. They had sharp wits and tongues that worked deftly and quickly, which only pissed off the lunkhead bosses, the law and the ruling elite even more. The authorities and their lackeys dislike radicals even more when they’re much smarter than they are.

What was the role of music in the creation of the Wobbly movement culture?

Music was a centerpiece of the Wobbly “movement culture.” However, I wouldn’t say this came into existence with the IWW. Earlier, the abolitionists and the Gilded Age labor movement made singing, songwriting, poetry and other forms of writing a key part of their efforts. Coal miners and Jewish textile workers had already developed a strong working-class poetic and musical tradition, as did the Knights of Labor. So Joe Hill and Woody Guthrie were standing on big shoulders.

Having said that, the IWW took the music and poetry to new heights and cleverly used singing and chanting as a way to garner attention from workers, the media, and the authorities. Fifty workers singing makes a lot more noise at a rally or in a jail cell than one speaker on a soapbox or one person ranting in the joint.

What kinds of considerations did you take into account in updating Hill’s music?

I quickly decided I wanted to record a couple of the sentimental love songs because that part of Hill’s personality had been neglected. They read like old vaudeville and Tin Pan Alley lyrics, so I worked on melodies and chord changes that were common to those types of pieces. I also decided that on songs like “Ta Ra Ra Boom De Ay” and “It’s A Long Way Down to the Soupline” I wanted to use a brass band that had the flavor of the music hall that Hill was leaning on. I hoped to make it sound a bit like a drunken Salvation Army band in the process, which fit the brass band sound anyway.

I knew he’d been to Hawaii and the Pacific Islands too, so I decided to do a couple of songs with the ukulele at the center, which was appropriate given some things I’d read on Hill and the music he heard on that trip (plus the ukulele had become popular at the time).

I wanted to make a record that Hill would like. That was my priority from the beginning. I don’t think he’d like a straight folk revival, strumming acoustic guitar approach, as that has nothing to do with most of his material. He played the piano and the fiddle, after all. The folk revivalists did a great service by keeping Hill’s work in circulation, but trying to keep him in that small musical box is way off the mark. So, I borrowed from vaudeville and the music hall, piano blues and early jazz, alt-country, swing, punk and gospel.

I think Joe would be very happy with this recording—more so than what’s preceded.

What sorts of musical choices did Hill make? What kinds of influences did he draw on?

Hill came from a music-loving family and he also had some musical training as a child before his dad died and the family fell on hard times. He heard a lot of religious music, as his family were strong Lutherans and sometimes attended Salvation Army gatherings. Since hymns were well known, even across sectarian religious (and political) lines, hymn tunes were often used by labor songwriters going back to the mid-19th century. Hill’s use of “In the Sweet By and By” as the tune for “The Preacher and the Slave” was very much in a labor tradition, as was “Nearer My God to Thee” for his “Nearer My Job to Thee.”

Hill also wrote his own music for some of his songs—for his classic “Rebel Girl,” for example. And though he leaned heavily on the music of the “Internationale” for his “Workers of the World Awaken,” he did include clear pieces of his own work in writing that one, too.

But most often he drew on the vaudeville, music hall, early Tin Pan Alley songs that were popular with workers at the time. For “Ta Ra Ra Boom De Ay” he took the tune from a music hall hit by the same name. For “Scissor Bill” he took the tune from “Steamboat Bill,” which was a huge hit at the time and a best-selling early recording.

With all his tune choices, he was like other working-class writers and had the same goal—use tunes that workers knew already for labor songs and then they’d be easy for workers to sing.

Can you give the background of some of the other songs?

“Der Chief of Fresno” grew out of the IWW’s free speech campaign. Fresno was a place where the police were notably confrontational. Hill added his voice to the mix with a piece that appears to have been a chant of sorts. That’s why I added the multiple voices on the words “der chief” whenever it comes around. I like the use of the German “Der” in the title, as if the chief might make a good member of the oppressive Prussian army.

“Stung Right” represents the strong anti-military bent of the IWW, even before the outbreak of World War I. Many immigrant workers had come from regions of the world where they had been drafted and made to fight the ruling class’s battles, and they were determined to stay out of any such future wars. What’s more, many working-class groups saw war as a senseless ruling-class fight that only pitted workers against each other. Nationalism was seen as suspect.

Little wonder, then, that after the Spanish-American War in 1898, and the needless slaughter it entailed, anti-war and anti-military sentiments found welcome ears. Hill was aware that workers also signed up with the military when their economic situations were difficult. At least the army gave you a bit of cash and some food. In this piece, he’s warning workers not to fall for it.

“Rebel Girl” is another of his best efforts. Hill wrote it himself for Elizabeth Gurley Flynn. Hill followed her career closely and admired her work on behalf of labor. They frequently corresponded while Hill was in prison. He even wrote a cute little song for her son called “Bronco Buster Flynn.” Flynn had visited Hill while he was awaiting execution and sent him a photo of her son Buster.

One song that struck me was "Come and Take a Joy-Ride in My Aeroplane." As you say in your liner notes, that seems to represent a "romantic and carefree side" that we don't typically associate with Hill.

There were three of these romantic, sentimental songs that Hill wrote, all of which were discovered after his arrest and none of which had music or tunes for them. Of course, there may be more, but we haven’t yet found those. They weren’t typical of Hill’s writing, which is generally focused on labor and political issues. But you can find some of this same sentiment in his letters, so it clearly was a key component of who Joe Hill was.

Frankly, I think historians and musicians have missed the boat in not addressing this romantic impulse. I suppose it seems counter to our image of the left-wing radical. But hell—I’d rather hang out with a person with strong romantic tendencies and a left-wing leaning personality, than some dour old sourpuss like Marx or Lenin, wouldn’t you? I also think within the IWW there was a strong sense of romance about the world. This can be found clearly in Wobbly writers like Haywire Mac or Ralph Chaplin. Some of this could be channeled toward a utopian impulse, as in the classic IWW song “Big Rock Candy Mountain.”

Hill just gave it a more personal twist. Why shouldn’t he want to fall in love and be carried away for a while in the reverie of romance? Don’t we all? Here’s a guy who went to work at age nine, contracted tuberculosis, went on the tramp to survive and worked an endless stream of low-paying jobs. Why not dream of taking flight above this dreary earth with your gal and soar above the troubles below? Sounds like fun to me.

What is it about Hill that makes his legacy resonate so widely?

Obviously, the injustice of his arrest, trial, and execution continues to resonate, especially when hardly a day passes without some prisoner being released after new evidence or DNA tests exonerate him or her.

Beyond that obvious point, I think there are many people who hear his songs and immediately sense that the issues raised by Hill and other Wobbly bards remain important to our national discussion, including decent wages and working conditions, immigrant rights, discrimination based on race, the oppression of women, the right to form a union and the right to free speech.

I have to admit, however, that I’m often bewildered by conservative labor leaders in the USA who pull out Hill’s legacy when it’s convenient and make positive comments about him. If he were around today, they’d throw him out of their conventions in a minute.

I also think there’s considerable appeal to Hill’s personal demeanor throughout the trial. He died a heroic, noble death, something few people can claim. He gave his life for the cause, and his trial and execution played out in the international media of the day. He’s a romantic character of the type that is more common in early movies than in reality.